
Covariant spatio-temporal receptive fields for neuromorphic computing

Neuromorphic computing exploits the laws of physics to perform computations, similar to the human brain. If we can “lower” the computation into physics, we achieve extreme energy gains, up to 27-35 orders of magnitude. So, why aren’t we doing that? Presently, we lack theories to guide efficient implementations. We can build the circuits, but we don’t know how to combine them to achieve what we want. Current neuromorphic models cannot compete with deep learning.

Here, we provide a principled computational model for neuromorphic systems based on tried-and-tested spatio-temporal covariance properties. We demonstrate that simple neuromorphic primitives outperform naïve artificial neural networks on an event-based vision task. The direction is exciting to us because

- we define mathematically coherent covariance properties, which are required to correctly handle signals in space and time,

- we use neuromorphic primitives (leaky integrator and leaky integrate-and-fire models) to outcompete a non-neuromorphic neural network, and

- our results have immediate relevance in signal processing and event-based vision, with the possibility to extend to other tasks over space and time, such as memory and control.

What is a spatial receptive field?

A spatial receptive field can be thought of as a kernel that pattern-matches the incoming signal $f\colon \mathbb{R}^n \to \mathbb{R}$.

Formally, a spatial receptive field is the convolution integral of the input signal with a kernel $g$.

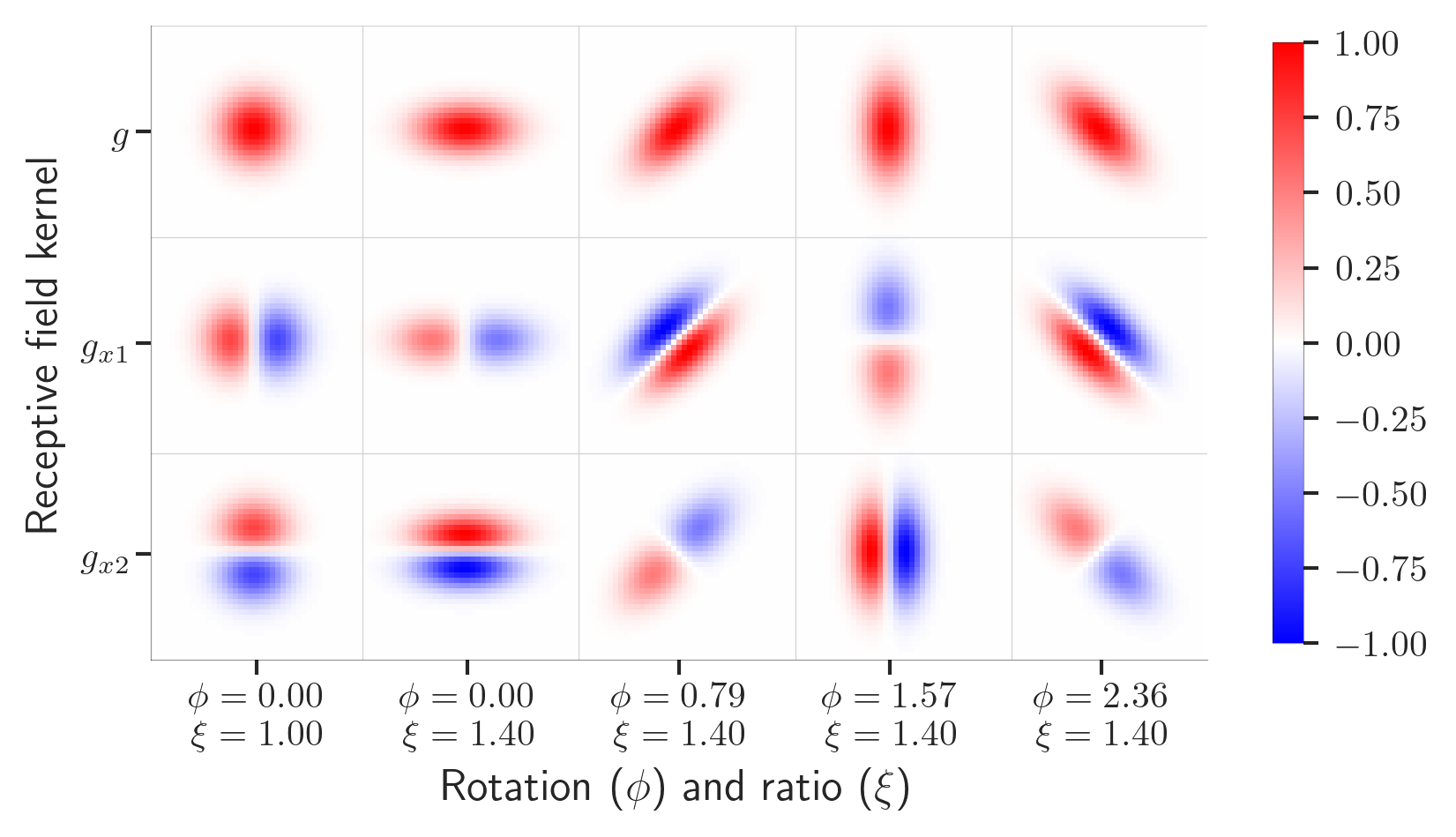
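For comparison with the time-causal version in the next section, the standard spatial convolution integral over $\mathbb{R}^n$ can be written in the same notation as the temporal formula. The integral runs over all of space, in contrast to the temporal case:

\[(g * f)(x) = \int_{\xi \in \mathbb{R}^n} g(\xi)\, f(x - \xi)\, d\xi\]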

In our case, we use Gaussian derivatives, which are heavily exploited in biological vision. Gaussian kernels are also known to be covariant to affine transformations if we parameterize them correctly. That is, we rotate, stretch, and differentiate them in various ways to accurately match all the possible types of transformations in the image plane. Below you see some examples of spatial receptive fields. A Gaussian kernel (upper left) is differentiated along two axes (rows 2 and 3), as well as skewed and rotated (columns 2 to 5). Each kernel provides a unique response to the input signal, and that response codes exactly for the transformation in the image plane.
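The following minimal NumPy sketch makes the affine parameterization concrete. The function name `affine_gaussian_derivative` and its parameters are our own hypothetical choices, not code from the paper. It builds the covariance matrix $\Sigma = R\,\mathrm{diag}(\sigma_u^2, \sigma_v^2)\,R^\top$ from a rotation angle and two axis scales, then samples the Gaussian. It can optionally take a first-order derivative using $\nabla g = -\Sigma^{-1} x\, g$. Higher-order derivatives are left out to keep it short.

```python
import numpy as np

def affine_gaussian_derivative(size, sigma_u, sigma_v, theta, order=(0, 0)):
    """Sample an affine Gaussian kernel (optionally differentiated once) on a size x size grid.

    Hypothetical helper for illustration:
    sigma_u, sigma_v -- standard deviations along the two principal axes (stretch)
    theta            -- rotation of the principal axes in radians
    order            -- derivative order (dx, dy); only (0, 0), (1, 0), (0, 1) here
    """
    r = np.arange(size) - (size - 1) / 2
    x, y = np.meshgrid(r, r, indexing="xy")
    # Covariance Sigma = R diag(sigma_u^2, sigma_v^2) R^T (rotation + stretch)
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta), np.cos(theta)]])
    cov = rot @ np.diag([sigma_u**2, sigma_v**2]) @ rot.T
    inv = np.linalg.inv(cov)
    pts = np.stack([x, y], axis=-1)                       # shape (size, size, 2)
    quad = np.einsum("...i,ij,...j->...", pts, inv, pts)   # x^T Sigma^{-1} x
    g = np.exp(-0.5 * quad) / (2 * np.pi * np.sqrt(np.linalg.det(cov)))
    # Gradient of the Gaussian: grad g = -Sigma^{-1} x g
    grad = -np.einsum("ij,...j->...i", inv, pts) * g[..., None]
    if order == (0, 0):
        return g
    if order == (1, 0):
        return grad[..., 0]
    if order == (0, 1):
        return grad[..., 1]
    raise ValueError("only orders (0, 0), (1, 0) and (0, 1) are supported in this sketch")
```

Here are two checks you can run against the sketch. First, an x-derivative kernel responds strongly to a vertical step edge, while the y-derivative kernel gives zero response to the same edge. Second, rotating a stretched kernel by 90° yields the same kernel as swapping its two axis scales.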

What is a temporal receptive field?

In time, this looks a little different. Here, we impose a causality assumption on our signal: we cannot integrate from $-\infty$ to $\infty$ in time, because we don’t know the future.

\[(h * f)(t) = \int_{u = 0}^{\infty} h(u)\, f(t - u)\, du\]

That limits our choice of $h$, and we instead integrate with a truncated exponential kernel, as in the video below.

\[h(t;\, \mu) = \begin{cases} \mu^{-1}\exp(-t/\mu) & t \gt 0 \\ 0 & t \leqslant 0 \end{cases}\]

Imagine applying this kernel to a moving object in time.

If the time constant \(\mu\) is large, the kernel will smear out the signal over time, similar to the video below.

This is a pattern matching operation in time, similar to the spatial case.
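Here is a minimal discrete sketch of the time-causal convolution. It assumes NumPy, a fixed sampling step `dt`, and a kernel cut off after a finite number of samples. The helper names are hypothetical. Because the kernel is zero for $t \leqslant 0$, the output at each step depends only on earlier input samples. Feeding in a brief pulse shows the smearing effect. A small $\mu$ gives a tall, short response, while a large $\mu$ spreads the same pulse over a much longer time.

```python
import numpy as np

def truncated_exponential(mu, dt, length):
    """Sample h(t; mu) = exp(-t / mu) / mu at t = dt, 2 dt, ..., length * dt (all t > 0)."""
    t = np.arange(1, length + 1) * dt
    return np.exp(-t / mu) / mu

def causal_convolve(f, h, dt):
    """Riemann-sum approximation of (h * f)(t) = int_0^inf h(u) f(t - u) du.

    A leading zero encodes h(0) = 0, so output[n] only uses f[0], ..., f[n - 1].
    """
    h_full = np.concatenate([[0.0], h])
    return np.convolve(f, h_full)[: len(f)] * dt

dt = 0.01                       # assumed sampling step in seconds
f = np.zeros(300)
f[50] = 1.0 / dt                # brief pulse with unit area at t = 0.5 s
short = causal_convolve(f, truncated_exponential(0.05, dt, 300), dt)   # small mu
long_ = causal_convolve(f, truncated_exponential(0.5, dt, 300), dt)    # large mu
```

With the larger time constant, the peak of `long_` is much lower than the peak of `short`. However, `long_` is still clearly nonzero a full second after the pulse, by which point `short` has essentially vanished.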

Combining spatial and temporal receptive fields

Thanks to the excellent work by Tony Lindeberg on scale-space theory, we have a principled way to combine the covariance properties of the spatial and temporal receptive fields. See in particular his publications on normative receptive fields, temporal causality and temporal recursivity, as well as his video explaining time-causal and time-recursive receptive fields. Our work extends this theory to spiking networks for neuromorphic computing. We also use affine Gaussian derivative kernels over the spatial domain. In previous work, we presented regular Gaussian derivatives based on isotropic spatial smoothing kernels.

Concretely, we want a given scale-space representation \(L\) to be covariant when the signal is subjected to a spatial transformation \(A\) over space (\(x\)) and a temporal scaling operation \(S_t\) over time (\(t\)).
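As a hedged illustration, consider the simplest case. This is our own simplification, not the full statement from the paper. Take a separable kernel made of an affine Gaussian $g(\xi;\, \Sigma)$ with covariance matrix $\Sigma$ (no derivatives) in space, and a single truncated exponential $h(u;\, \mu)$ in time:

\[L(x, t;\, \Sigma, \mu) = \int_{u = 0}^{\infty} \int_{\xi \in \mathbb{R}^n} g(\xi;\, \Sigma)\, h(u;\, \mu)\, f(x - \xi,\, t - u)\, d\xi\, du\]

Suppose the transformed signal satisfies $f'(x', t') = f(x, t)$ with $x' = A x$ (invertible $A$) and $t' = S_t\, t$ ($S_t > 0$), and $L'$ is computed from $f'$ in the same way. Then the substitutions $\xi' = A\xi$ and $u' = S_t\, u$ give

\[L'(x', t';\, A \Sigma A^\top,\, S_t \mu) = L(x, t;\, \Sigma, \mu)\]

This holds because $g(A\xi;\, A\Sigma A^\top) = |\det A|^{-1} g(\xi;\, \Sigma)$ and $h(S_t u;\, S_t\mu) = S_t^{-1} h(u;\, \mu)$ exactly cancel the Jacobians $|\det A|$ and $S_t$. In other words, transforming the input and adapting the kernel parameters accordingly leaves the response unchanged. Derivative kernels pick up additional factors from $A$ and $S_t$, which this sketch does not cover.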

Neuromorphic spatio-temporal receptive fields

The main message of our paper is that we can obtain exactly this spatio-temporal covariance property by using neuromorphic neuron models. This works for the leaky integrator because its impulse response is exactly a truncated exponential kernel. More generally, we show that the Spike Response Model (SRM), which describes computational neuron models as linearized systems, exhibits the same covariance properties, even though the output can be truncated into binary spikes.
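The leaky integrator connection can be sketched in a few lines of NumPy. We assume a sampling step `dt` and the update $v[n] = a\,v[n-1] + (\Delta t/\mu)\,x[n]$ with $a = \exp(-\Delta t/\mu)$. This is our own discretization, not necessarily the one used in the paper. Unrolling the recursion gives $v[n] = \sum_{k \geq 0} \Delta t\, h(k \Delta t;\, \mu)\, x[n-k]$, which is a sampled truncated exponential kernel (sampled from lag 0 rather than strictly $t > 0$). The recursion keeps only a single number of state, which is what makes it time-recursive. The LIF variant uses the same membrane update but thresholds and resets it into binary spikes. The function names and the threshold value are hypothetical.

```python
import numpy as np

def leaky_integrator(x, mu, dt):
    """Time-recursive leaky integrator: v[n] = a v[n-1] + (dt / mu) x[n], a = exp(-dt / mu)."""
    a = np.exp(-dt / mu)
    v = np.zeros(len(x))
    state = 0.0
    for n, xn in enumerate(x):
        state = a * state + (dt / mu) * xn
        v[n] = state
    return v

def leaky_integrate_and_fire(x, mu, dt, threshold=1.0):
    """Same membrane update, but emit a binary spike and reset to 0 when the threshold is reached."""
    a = np.exp(-dt / mu)
    spikes = np.zeros(len(x), dtype=bool)
    state = 0.0
    for n, xn in enumerate(x):
        state = a * state + (dt / mu) * xn
        if state >= threshold:
            spikes[n] = True
            state = 0.0        # reset after spiking
    return spikes
```

Below threshold, the LIF membrane follows the leaky integrator exactly, and the spikes are a nonlinear readout on top of that linear state. This loosely mirrors the intuition behind the SRM argument.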

With that, we train our model on a generated event dataset that simulates geometric shapes moving over time with varying velocity. The lower the velocity, the sparser the signal, to the point where the signal is so sparse that it is impossible to track the objects with the human eye.
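Here is a toy illustration of why lower velocity means a sparser signal. It rests on heavy simplifying assumptions: a 1D bar, no noise, and events emitted wherever a pixel changes between consecutive frames. This is a hypothetical generator with our own units (pixels per step), not the dataset from the paper. A bar moving at `velocity` pixels per step only changes pixels when its rounded position moves, so the average number of events per step scales with the velocity.

```python
import numpy as np

def render(pos, width, size):
    """Binary 1D frame with a bar of `width` pixels starting at floor(pos)."""
    frame = np.zeros(size, dtype=bool)
    start = int(np.floor(pos))
    frame[start:start + width] = True
    return frame

def mean_events_per_step(velocity, steps=100, width=5, size=200):
    """Count pixels that change between consecutive frames, averaged over all steps."""
    prev = render(0.0, width, size)
    total = 0
    for n in range(1, steps + 1):
        cur = render(velocity * n, width, size)
        total += np.count_nonzero(cur != prev)   # pixels that switched on or off
        prev = cur
    return total / steps
```

At `velocity=1.0`, the bar produces two events per step (one at each edge). At `velocity=0.16`, it produces only a fraction of an event per step on average.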

The figure below shows the performance of our model compared to a non-neuromorphic neural network with ReLU units. The leaky integrator (LI) and leaky integrate-and-fire (LIF) models outperform the ReLU network in most cases. This holds even against the ReLU network that has access to multiple past frames (multi-frame, MF, as opposed to single-frame, SF).

For context, the sparse setting (velocity = 0.16) generates about 1‰ activations per timestep. Of these, roughly 4 per timestep are shape-related, against a backdrop of about 500 noise-related activations.
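Using the rounded figures above, the fraction of activations per timestep that actually belong to a shape is roughly

\[\frac{4}{4 + 500} \approx 0.8\,\%,\]

so more than 99 % of the activity in this setting is noise.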

The results are exciting because we can capture covariance properties exclusively with leaky integrators and leaky integrate-and-fire neurons. In practice, our theory allows us to construct vision processing systems that are guaranteed to capture the transformations we need them to. Eventually, we can deploy these systems on cheap, low-power neuromorphic hardware.

Acknowledgements

The authors gratefully acknowledge support from the EC Horizon 2020 Framework Programme under Grant Agreements 785907 and 945539 (HBP), the Swedish Research Council under contracts 2022-02969 and 2022-06725, and the Danish National Research Foundation grant number P1.

Citation

Our work is available as a preprint at arXiv:2405.00318 and can be cited as follows:

    @misc{pedersen2024covariant,
      title={Covariant spatio-temporal receptive fields for neuromorphic computing},
      author={Jens Egholm Pedersen and Jörg Conradt and Tony Lindeberg},
      year={2024},
      eprint={2405.00318},
      archivePrefix={arXiv},
      primaryClass={cs.NE}
    }